AI Agents and the Death of SOLID

I spent years internalising SOLID principles. But after months of writing code with AI agents every day, I've started wondering: who were they actually for?

I've been writing code with AI agents every day for months now. Claude Code is my pair programmer, my refactoring tool, my rubber duck. And somewhere along the way, I stopped caring about SOLID.

Not because I forgot what it means. I spent years internalising Single Responsibility, Open/Closed, all of it. Years ago I attended a workshop with Sandi Metz in London. The Sandi Metz, author of Practical Object-Oriented Design. I spent days working through refactoring exercises, learning to spot code smells, training my eye for clean object-oriented design. Her book genuinely changed how I thought about writing software. These principles were drilled into me as the mark of a professional developer.

But lately I've been wondering: who were they actually for?

Principles built for human brains

SOLID, clean code, Sandi's rules, Martin Fowler's refactoring catalogue. These weren't handed down from some universal law of computing. They were invented to help humans work with code. Humans who can hold about seven things in working memory. Humans who need to read a method name and understand what it does without reading the implementation. Humans who work in teams where someone else will maintain what you wrote six months from now.

The entire philosophy of code quality, when you strip it back, is about compensating for the limitations of the human brain.

What happens when the brain changes?

I work with Claude Code on Elixir projects daily. Here's what I've noticed about how I write code now versus a year ago:

Shotgun surgery doesn't scare me anymore. In the old world, a change that touched 50 files was a code smell: shotgun surgery. It meant your abstractions were wrong, your responsibilities were scattered, and some poor developer was going to spend a day tracking down every place that needed updating. We restructured entire codebases to avoid it. But Claude Code will happily update a thousand files in one go and not miss a single one. The thing we spent years architecting around just... stopped being a problem. That's a strange feeling.

I stopped obsessing over DRY. If Claude needs to modify a function, it's cheaper for it to have all the context right there than to chase abstractions across five files. Duplication that would have made me twitch before is now helpful. It keeps things self-contained within a context window.
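
As a rough illustration of what "self-contained" can look like, here is a minimal sketch. It is in Python rather than Elixir purely for brevity, and every name in it (`register_user`, `invite_user`, the email rule itself) is hypothetical rather than taken from a real project. The validation is deliberately repeated in both functions instead of being pulled into a shared helper in another file:

```python
# Hypothetical example: two handlers that each validate an email address.
# A DRY version would import one shared validator from another module;
# here each function carries its own copy so it can be read and changed
# without opening any other file.


def register_user(email: str) -> dict:
    # Validation is inlined: everything needed to change it is right here.
    cleaned = email.strip().lower()
    if "@" not in cleaned or cleaned.startswith("@") or cleaned.endswith("@"):
        return {"ok": False, "error": "invalid email"}
    return {"ok": True, "email": cleaned}


def invite_user(email: str, invited_by: str) -> dict:
    # Same check, deliberately duplicated rather than shared.
    cleaned = email.strip().lower()
    if "@" not in cleaned or cleaned.startswith("@") or cleaned.endswith("@"):
        return {"ok": False, "error": "invalid email"}
    return {"ok": True, "email": cleaned, "invited_by": invited_by}
```

The cost is the familiar one: the two copies can drift apart, so a rule change has to be applied to both. The bet is that an agent applying that change everywhere is cheaper than an agent chasing the helper across files.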

Long methods? Feature envy? God objects? Half the classic code smells from Fowler's catalogue feel different now. A long method that does too much? Claude can read and modify it just fine. It doesn't get tired or lose track halfway through. Feature envy, where a method uses another class's data more than its own? The AI doesn't care about class boundaries the way we do; it can read across as much of the codebase as the task needs. Even god objects, the ultimate sin: an AI agent can navigate a 2,000-line class without breaking a sweat. These smells were warnings about human cognitive overload. Remove the human from the loop, and they stop smelling.

I care less about clever abstractions. A beautiful inheritance hierarchy or a perfectly composed set of behaviours? Claude doesn't appreciate the elegance. It actually works better with explicit, flat, slightly verbose code. Magic and convention-based patterns that save me typing actively confuse the AI.
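
To make the contrast concrete, here is a small hypothetical sketch (Python again, with made-up names such as `MagicHandler` and `handle_event`). The first version finds its handler by building a method name at runtime, which is the kind of convention-based magic described above. The second spells out every case:

```python
class MagicHandler:
    """Convention-based: the handler is looked up by name at runtime."""

    def handle(self, event_type: str, payload: dict) -> str:
        # Nothing in this file mentions "on_created" as a call site;
        # the link only exists once the string is assembled.
        method = getattr(self, f"on_{event_type}")
        return method(payload)

    def on_created(self, payload: dict) -> str:
        return f"created {payload['id']}"

    def on_deleted(self, payload: dict) -> str:
        return f"deleted {payload['id']}"


def handle_event(event_type: str, payload: dict) -> str:
    """Explicit and flat: every supported event is visible in one place."""
    if event_type == "created":
        return f"created {payload['id']}"
    if event_type == "deleted":
        return f"deleted {payload['id']}"
    raise ValueError(f"unknown event type: {event_type}")
```

Both behave the same for known events. The explicit version is longer, but a plain text search for "created" lands on the code that runs, which is roughly how an agent explores a codebase.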

I've started preferring declarative over imperative. Ash Framework leans heavily into this. You declare what a resource does rather than writing the how. It turns out describing what you want is much closer to how you prompt an AI than step-by-step instructions are.
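
This is not Ash's API, just a generic sketch of the same idea in Python with hypothetical names (`USER_RULES`, `validate_declarative`). The imperative version encodes the rules as control flow. The declarative version states the rules as data and leaves the "how" to one small generic function:

```python
def validate_imperative(user: dict) -> list[str]:
    # Imperative: the rules are buried in step-by-step control flow.
    errors = []
    if not user.get("name"):
        errors.append("name is required")
    if user.get("age") is None:
        errors.append("age is required")
    elif user["age"] < 18:
        errors.append("age must be at least 18")
    return errors


# Declarative: the rules are plain data that says what must hold.
USER_RULES = {
    "name": {"required": True},
    "age": {"required": True, "min": 18},
}


def validate_declarative(record: dict, rules: dict) -> list[str]:
    # One generic interpreter applies whatever rules it is given.
    errors = []
    for field, rule in rules.items():
        value = record.get(field)
        if value is None or value == "":
            if rule.get("required"):
                errors.append(f"{field} is required")
            continue
        if "min" in rule and value < rule["min"]:
            errors.append(f"{field} must be at least {rule['min']}")
    return errors
```

Adding a rule in the declarative version means adding one line to `USER_RULES`, which reads a lot like the sentence you would type into a prompt.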

The new quality criteria

I'm not arguing for lower quality. I'm noticing that the definition of quality is shifting. If I were to write down principles for the AI era, they'd look something like:

- Explicit over implicit: convention-over-configuration is hostile to AI agents

- Self-contained over DRY: duplication is cheap when AI writes it; context-switching is expensive

- Flat over nested: deep call stacks eat context windows

- Declarative over imperative: describe the what, let the tooling figure out the how

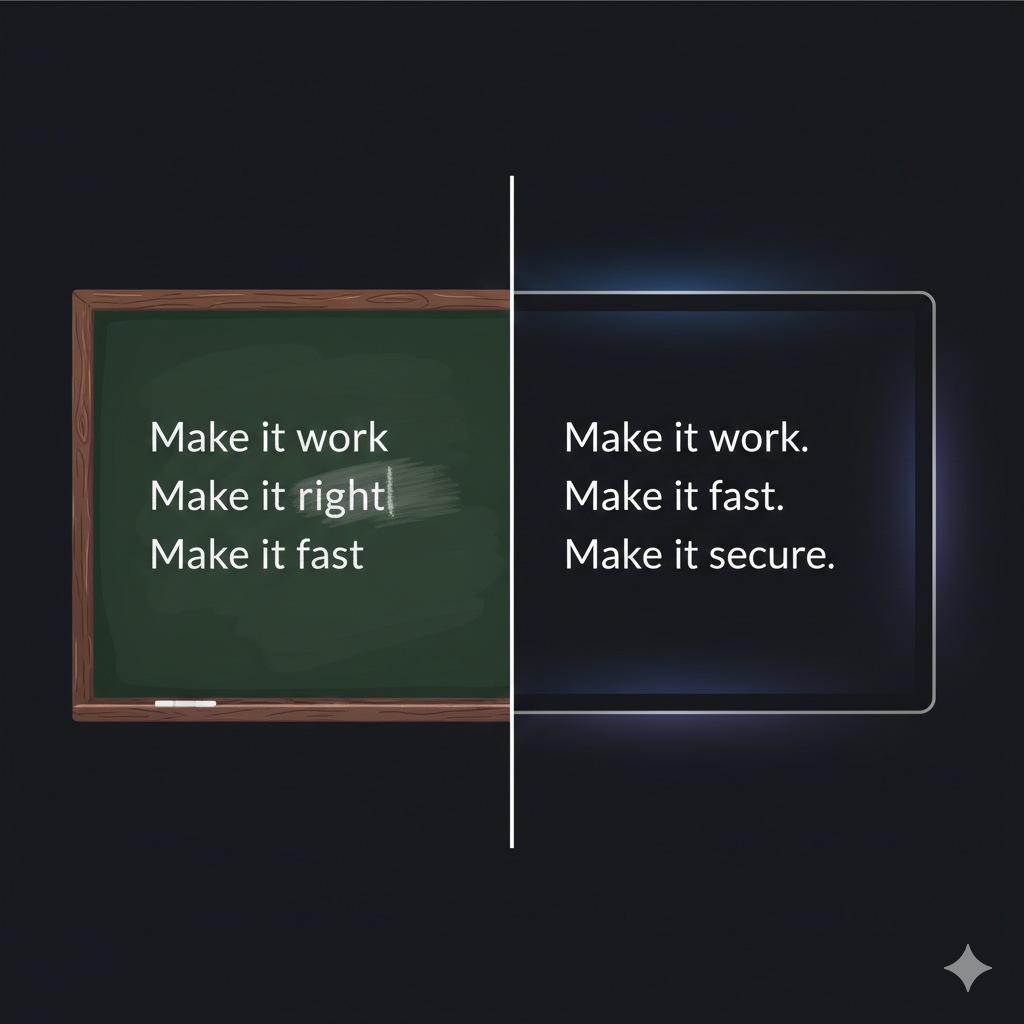

There's an old saying: "Make it work, make it right, make it fast." The idea was that you'd iterate. Get something functional, then clean it up, then optimise. But "make it right" always meant making it readable, maintainable, well-structured for humans. With AI agents, "make it work" and "make it right" are collapsing into a single step. Claude doesn't need a messy first draft followed by a careful refactor. It just writes the thing. Which means maybe the new version is simpler: "Make it work. Make it fast. Make it secure."

And honestly, maybe we should be spending less time debating code organisation and more time on things that actually matter to users: performance and security. No one ever thanked you for a perfectly SOLID codebase. Users notice when the page loads in 200ms instead of 3 seconds. They care when their data doesn't get leaked. The old quality conversation was so focused on how code reads that we sometimes forgot to prioritise how it runs. If AI agents free us from obsessing over structure, maybe that's time we can redirect toward the stuff that actually ships value.

None of these new criteria are the opposite of good engineering. They're just optimised for a different reader.

The honest caveat

I'm not ready to throw SOLID in the bin entirely. We're in a hybrid period. I still review AI-generated code. I still debug it. I still need to understand what happened when something breaks at 2am. There are regulatory contexts where a human has to be able to explain what the code does and why.

And there's something deeper. AI agents don't truly understand the code they write. They're remarkably good at producing working software, but when something goes subtly wrong, you need a human who can reason about the architecture. For that, readability still matters.

Where I've landed

I think we'll see a split. Code that faces humans (libraries, public APIs, core domain logic) will keep something like traditional quality standards. But the growing mass of application code that gets written, modified, and sometimes entirely rewritten by AI agents? The old rules don't quite fit.

I'm not mourning SOLID. I'm watching the craft evolve. The principles I learned aren't wrong. They were right for their context. The context is changing.

The interesting question isn't whether AI will kill code quality. It's whether we're honest enough to admit that "quality" was always a human concept, and humans are no longer the only ones in the room.